了解HDFS命令、Hadoop、Spark SQL、SQL查询、ETL和数据分析| Spark Hadoop集群虚拟机|完全解决的问题

你会学到什么

作为本课程的一部分,学生将获得在Spark Hadoop环境中工作的实践经验,该环境是免费且可下载的。

学生将有机会在沙箱环境中使用Hadoop集群上的Spark解决数据工程和数据分析问题

发布HDFS命令。

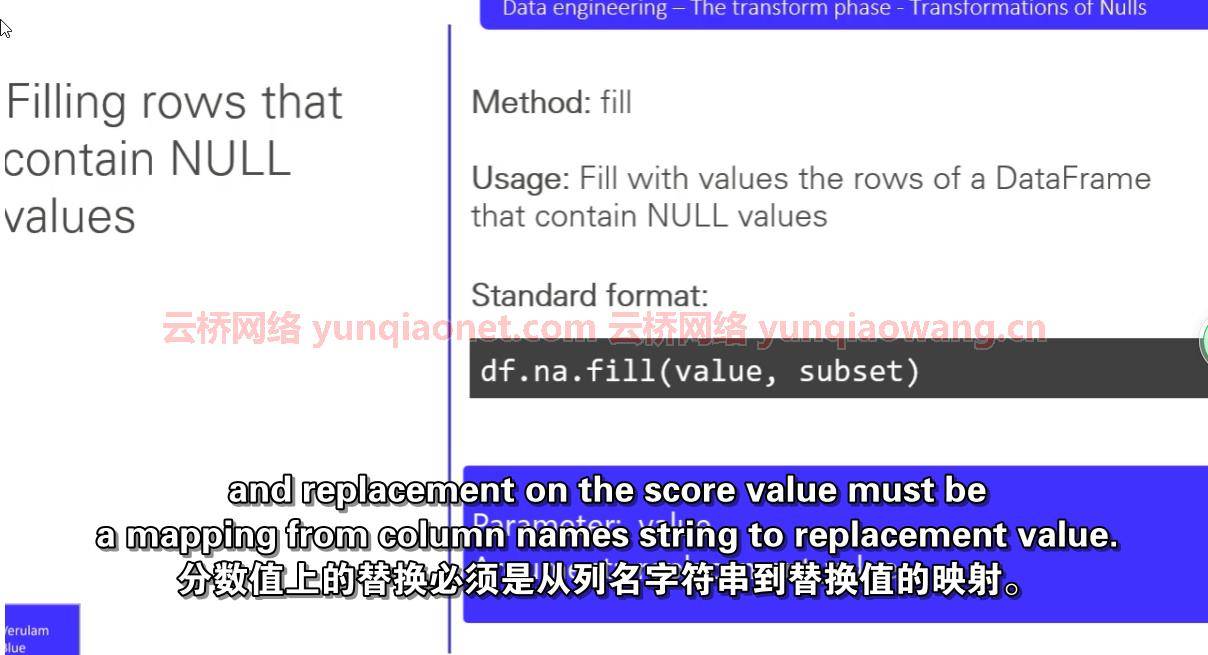

将存储在HDFS的一组给定格式的数据值转换为新的数据值或新的数据格式,并将其写入HDFS。

从HDFS加载数据用于Spark应用&使用Spark将结果写回HDFS。

以各种文件格式读写文件。

使用Spark API对数据执行标准的提取、转换、加载(ETL)过程。

使用metastore表作为Spark应用程序的输入源或输出接收器。

在Spark中应用查询数据集的基础知识。

使用Spark过滤数据。

编写计算聚合统计信息的查询。

使用Spark连接不同的数据集。

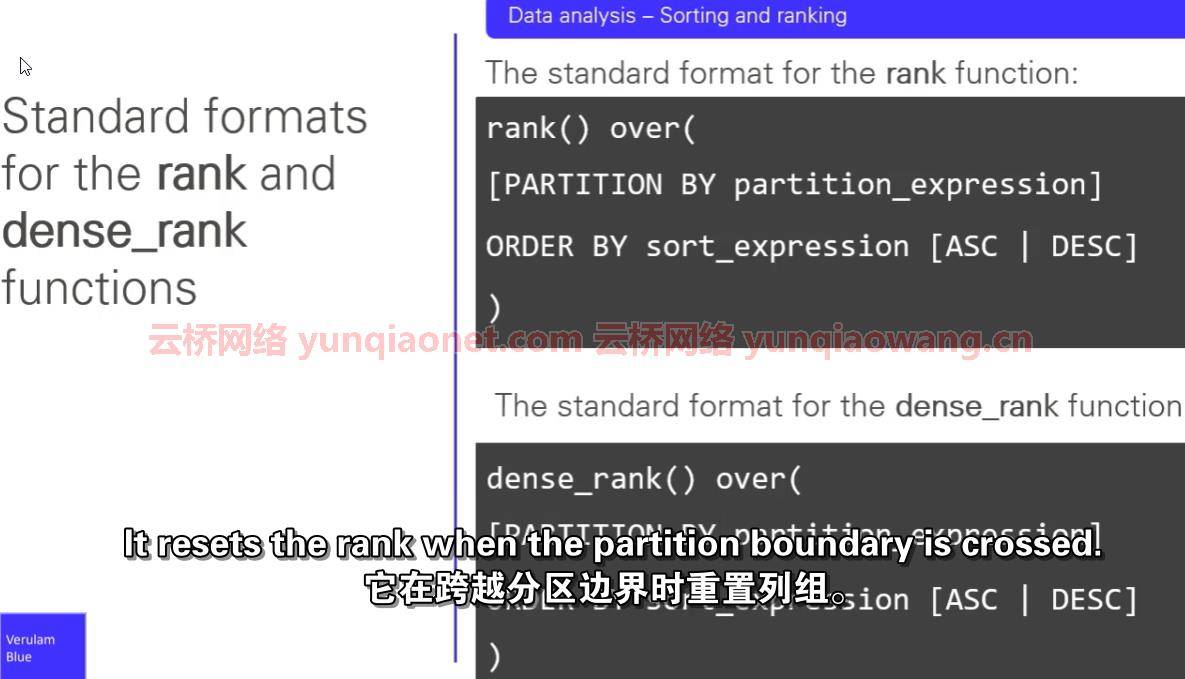

产生分级或分类的数据。

流派:电子学习| MP4 |视频:h264,1280×720 |音频:AAC,44.1 KHz

语言:英语+中英文字幕(云桥网络 机译)|大小解压后:8..37GB 含课程文件 |时长:5h 37m

Spark SQL & Hadoop (For Data Scientists & Big Data Analysts)

描述

Apache Spark是目前最流行的大数据处理系统之一。

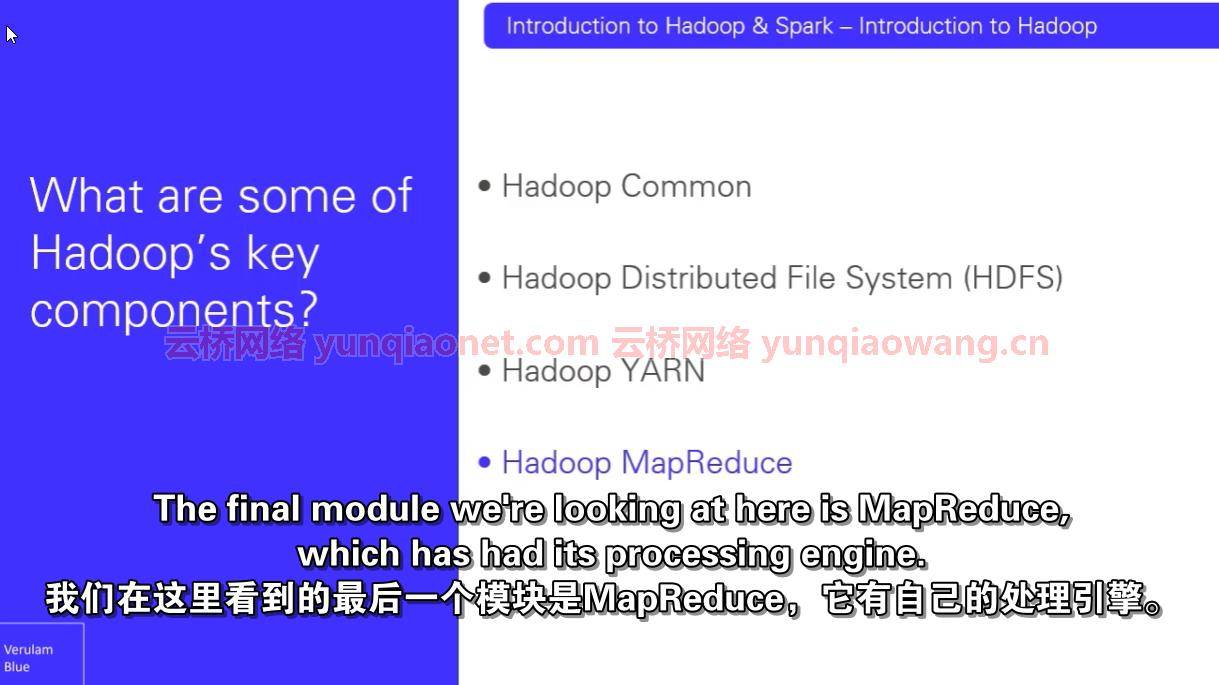

许多希望在本地存储数据的组织继续使用Apache Hadoop。Hadoop允许这些组织高效地存储从千兆字节到千兆字节的大数据集。

随着数据科学、大数据分析和数据工程职位空缺数量的持续增长,对具备Spark和Hadoop技术知识的个人填补这些空缺的需求也将持续增长。

本课程专为希望利用Hadoop和Apache Spark的力量来理解大数据的数据科学家、大数据分析师和数据工程师设计。

本课程将帮助那些希望交互式分析大数据或开始编写生产应用程序的人准备数据,以便在Hadoop环境中使用火花SQL进行进一步分析。

该课程也非常适合希望接触Spark & Hadoop的大学生和应届毕业生,或者只想在使用Spark-SQL的大数据环境中应用自己的SQL技能的任何人。

本课程旨在简明扼要,并为学生提供必要和足够的理论,足以让他们能够使用Hadoop & Spark,而不会陷入太多关于RDDs等旧的低级APIs的理论。

在解决本课程中包含的问题时,学生将开始发展这些技能&处理生产环境中出现的真实场景所需的信心。

(一)这门课程的问题不到30个。这些包括hdfs命令、基本数据工程任务和数据分析。

全面解决所有问题。

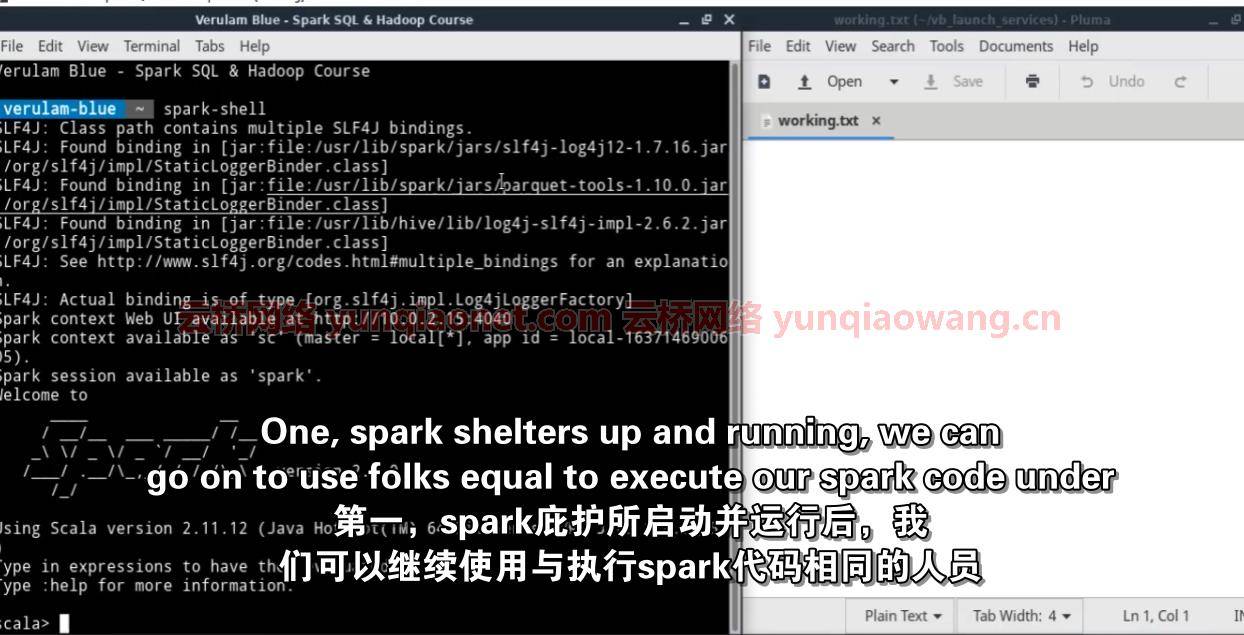

(c)还包括Verulam Blue虚拟机,这是一个已经安装了spark Hadoop集群的环境,以便您可以练习解决问题。

该虚拟机包含一个Spark Hadoop环境,该环境允许学生读写Hadoop文件系统中的数据,并将元存储表存储在Hive元存储上。

学生解决问题所需的所有数据集都已经加载到HDFS上,所以学生不需要做任何额外的工作。

虚拟机还安装了阿帕奇齐柏林飞艇。这是一款专门针对Spark的笔记本,类似于Python的Jupyter笔记本。

本课程将允许学生在实践过程中获得在Spark Hadoop环境中工作的实践经验

将存储在HDFS的一组给定格式的数据值转换为新的数据值或新的数据格式,并将其写入HDFS。

从HDFS加载数据用于Spark应用&使用Spark将结果写回HDFS。

以各种文件格式读写文件。

使用Spark API对数据执行标准的提取、转换、加载(ETL)过程。

使用metastore表作为Spark应用程序的输入源或输出接收器。

在Spark中应用查询数据集的基础知识。

使用Spark过滤数据。

编写计算聚合统计信息的查询。

使用Spark连接不同的数据集。

产生分级或分类的数据。

这门课是给谁的

本课程专为希望利用Hadoop和Apache Spark的力量来理解大数据的数据科学家、大数据分析师和数据工程师设计。

这门课程也非常适合大学生和刚毕业的学生,他们渴望在一家希望填补大数据相关职位的公司找到工作,或者任何只想在使用Spark-SQL的大数据环境中应用他们的SQL技能的人。

希望进入数据工程领域的软件工程师和开发人员也会发现本课程很有帮助。

Genre: eLearning | MP4 | Video: h264, 1280×720 | Audio: AAC, 44.1 KHz

Language: English | Size: 8..23 GB | Duration: 5h 37m

Learn HDFS commands, Hadoop, Spark SQL, SQL Queries, ETL & Data Analysis| Spark Hadoop Cluster VM | Fully Solved Qs

What you’ll learn

Students will get hands-on experience working in a Spark Hadoop environment that’s free and downloadable as part of this course.

Students will have opportunities solve Data Engineering and Data Analysis Problems using Spark on a Hadoop cluster in the sandbox environment that comes as part

Issuing HDFS commands.

Converting a set of data values in a given format stored in HDFS into new data values or a new data format and writing them into HDFS.

Loading data from HDFS for use in Spark applications & writing the results back into HDFS using Spark.

Reading and writing files in a variety of file formats.

Performing standard extract, transform, load (ETL) processes on data using the Spark API.

Using metastore tables as an input source or an output sink for Spark applications.

Applying the understanding of the fundamentals of querying datasets in Spark.

Filtering data using Spark.

Writing queries that calculate aggregate statistics.

Joining disparate datasets using Spark.

Producing ranked or sorted data.

Description

Apache Spark is currently one of the most popular systems for processing big data.

Apache Hadoop continues to be used by many organizations that look to store data locally on premises. Hadoop allows these organisations to efficiently store big datasets ranging in size from gigabytes to petabytes.

As the number of vacancies for data science, big data analysis and data engineering roles continue to grow, so too will the demand for individuals that possess knowledge of Spark and Hadoop technologies to fill these vacancies.

This course has been designed specifically for data scientists, big data analysts and data engineers looking to leverage the power of Hadoop and Apache Spark to make sense of big data.

This course will help those individuals that are looking to interactively analyse big data or to begin writing production applications to prepare data for further analysis using Spark SQL in a Hadoop environment.

The course is also well suited for university students and recent graduates that are keen to gain exposure to Spark & Hadoop or anyone who simply wants to apply their SQL skills in a big data environment using Spark-SQL.

This course has been designed to be concise and to provide students with a necessary and sufficient amount of theory, enough for them to be able to use Hadoop & Spark without getting bogged down in too much theory about older low-level APIs such as RDDs.

On solving the questions contained in this course students will begin to develop those skills & the confidence needed to handle real world scenarios that come their way in a production environment.

(a) There are just under 30 problems in this course. These cover hdfs commands, basic data engineering tasks and data analysis.

(b) Fully worked out solutions to all the problems.

(c) Also included is the Verulam Blue virtual machine which is an environment that has a spark Hadoop cluster already installed so that you can practice working on the problems.

The VM contains a Spark Hadoop environment which allows students to read and write data to & from the Hadoop file system as well as to store metastore tables on the Hive metastore.

All the datasets students will need for the problems are already loaded onto HDFS, so there is no need for students to do any extra work.

The VM also has Apache Zeppelin installed. This is a notebook specific to Spark and is similar to Python’s Jupyter notebook.

This course will allow students to get hands-on experience working in a Spark Hadoop environment as they practice

Converting a set of data values in a given format stored in HDFS into new data values or a new data format and writing them into HDFS.

Loading data from HDFS for use in Spark applications & writing the results back into HDFS using Spark.

Reading and writing files in a variety of file formats.

Performing standard extract, transform, load (ETL) processes on data using the Spark API.

Using metastore tables as an input source or an output sink for Spark applications.

Applying the understanding of the fundamentals of querying datasets in Spark.

Filtering data using Spark.

Writing queries that calculate aggregate statistics.

Joining disparate datasets using Spark.

Producing ranked or sorted data.

Who this course is for

This course has been designed specifically for data scientists, big data analysts and data engineers looking to leverage the power of Hadoop and Apache Spark to make sense of big data.

This course is also well suited for university students and recent graduates that are keen to land a job with a company that’s looking to fill a big data-related positions or anyone who simply wants to apply their SQL skills in a big data environment using Spark-SQL.

Software engineers & developers who are looking to break into the Data Engineering field will also find this course helpful.

云桥网络 为三维动画制作,游戏开发员、影视特效师等CG艺术家提供视频教程素材资源!

1、登录后,打赏30元成为VIP会员,全站资源免费获取!

2、资源默认为百度网盘链接,请用浏览器打开输入提取码不要有多余空格,如无法获取 请联系微信 yunqiaonet 补发。

3、分卷压缩包资源 需全部下载后解压第一个压缩包即可,下载过程不要强制中断 建议用winrar解压或360解压缩软件解压!

4、云桥网络平台所发布资源仅供用户自学自用,用户需以学习为目的,按需下载,严禁批量采集搬运共享资源等行为,望知悉!!!

5、云桥网络-CG数字艺术学习与资源分享平台,感谢您的赞赏与支持!平台所收取打赏费用仅作为平台服务器租赁及人员维护资金 费用不为素材本身费用,望理解知悉!